Artificial intelligence (AI) has long been part of our everyday lives. It assists with translation, helps with online searches and answers questions in the form of chatbots.

You can find more information about AI in this blog under the tag KI.

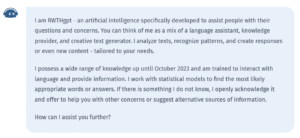

But what exactly is AI and how does it work? To better understand this, we asked RWTHgpt, our AI-supported chatbot. Who better to explain how AI works? In the following graphic, RWTHgpt describes itself:

What Does This Mean for Us?

So much for the self-description of AI. Sounds fascinating? It is!

In the next step, we’ll take a look together at key terms relating to artificial intelligence. What does “prompt engineering” mean? What is behind an “LLM”? And why is the “token limit” so important? We explain the most important terms – simply, understandably and to the point. Let’s start with an explanation of AI models and bots in general.

What is a generative AI model?

Generative AI models are programmes that can create new content based on existing data – such as text, images, music or even videos. They learn patterns and structures from large amounts of data and generate new content that is similar to what they have learnt. One of the best-known models of this type is GPT (Generative Pre-trained Transformer).

Chatbot vs. bot – what’s the difference?

A bot is a general term for software that performs tasks automatically – such as searching the internet. A chatbot, on the other hand, specialises in communicating with people, for example in customer service or on websites. Modern chatbots such as RWTHgpt or Ritchy use language models to conduct human-like conversations.

Most Important AI Terms

Prompt, output and prompt engineering

A prompt is the input that is given to an AI, for example a question or instruction. The output is the answer or the result that is generated in response. How good this answer is depends largely on how cleverly the prompt was formulated. This is why there is now even prompt engineering – the art of designing inputs in such a way that the AI model delivers optimal results.
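To make this more tangible, here is a minimal Python sketch that contrasts a vague prompt with a more carefully designed one. The helper `build_prompt` and its building blocks (role, task, constraints, output format) are purely illustrative assumptions; they are not part of RWTHgpt or any specific tool.

```python
from typing import List, Optional


def build_prompt(task: str, role: str = "",
                 constraints: Optional[List[str]] = None,
                 output_format: str = "") -> str:
    """Assemble a prompt from optional building blocks (hypothetical helper)."""
    parts = []
    if role:
        parts.append(f"You are {role}.")
    parts.append(task)
    for rule in constraints or []:
        parts.append(f"- {rule}")
    if output_format:
        parts.append(f"Answer format: {output_format}")
    return "\n".join(parts)


# A vague prompt leaves almost everything open to the model.
vague_prompt = build_prompt("Explain tokens.")

# An engineered prompt states the audience, the limits and the desired format.
engineered_prompt = build_prompt(
    task="Explain what a token is in a large language model.",
    role="a tutor for first-year students",
    constraints=["Use at most three sentences.", "Avoid jargon."],
    output_format="plain text",
)
print(engineered_prompt)
```

Both prompts ask about the same topic, but the second one tells the model who it is writing for and what the answer should look like, which is the core idea of prompt engineering.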

What is behind LLM?

LLM stands for Large Language Model. These models have been trained with enormous amounts of text in order to understand and generate natural language. They form the basis for many modern AI applications, such as translations, text generation or summaries.

Token, token limit and why this is important

In AI systems such as GPT, text is broken down into small units known as tokens. These can be words, parts of words or punctuation marks. The token limit describes how many such units the system can process at once. If this limit is exceeded, the text must be shortened or split up. Because the number of tokens determines how much text has to be processed, it has a direct impact on the cost and efficiency of an AI request.
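The following Python sketch illustrates the idea of counting tokens and splitting a text that exceeds a limit. It assumes a very rough stand-in tokenizer that only separates words and punctuation marks; real tokenizers also split words into smaller parts, so actual token counts will differ. The function names and the limit of 5 are chosen for illustration only.

```python
import re


def tokenize(text: str) -> list:
    """Rough stand-in for a real tokenizer: words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def split_into_chunks(text: str, token_limit: int) -> list:
    """Split the tokens of a text into chunks of at most token_limit tokens."""
    tokens = tokenize(text)
    return [tokens[i:i + token_limit]
            for i in range(0, len(tokens), token_limit)]


text = "Tokens can be words, parts of words or punctuation marks."
tokens = tokenize(text)
print(len(tokens))  # 12 tokens with this simplified tokenizer

# With an (unrealistically small) limit of 5, the text needs three chunks.
chunks = split_into_chunks(text, token_limit=5)
for chunk in chunks:
    print(chunk)
```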

What is a system prompt?

In addition to the user prompt, there is also the system prompt: this is a hidden instruction to the AI on how it should behave – for example, whether it should answer factually, creatively or particularly briefly. These system instructions influence the behaviour of the model in the background.
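As a sketch, many chat-style interfaces pass the system prompt and the user prompt to the model as a list of messages with a role and a content field. The layout below follows that common convention; whether RWTHgpt uses exactly this structure is an assumption, and `build_messages` is a hypothetical helper.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list:
    """Combine the hidden system instruction with the visible user input."""
    return [
        {"role": "system", "content": system_prompt},  # set by the operator
        {"role": "user", "content": user_prompt},      # typed by the user
    ]


messages = build_messages(
    system_prompt="Answer factually and in at most two sentences.",
    user_prompt="What is a token limit?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The user only ever types the second message; the first one travels along in the background and shapes every answer.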

Open source models – AI for everyone?

Some AI models are published as open source. This means that their source code and, in some cases, their training data are publicly available. Developers can further develop, adapt and improve these models. This promotes transparency, innovation and the independent use of AI, even beyond large tech companies.

How AI Learns from Data

Behind all of this is machine learning (ML) – a subfield of AI in which computers learn from data without being explicitly programmed for each task. Models such as GPT are trained on huge amounts of text. In doing so, they recognise patterns and structures, analyse context and meaning, and use this knowledge to understand language and generate new content.

But as powerful as these models are, their knowledge is limited to the time of training. They cannot know information that was published later, and they sometimes get facts wrong.
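To illustrate the principle of learning patterns from text, here is a deliberately tiny Python sketch. It is not how GPT works internally: instead of a neural network, it simply counts which word follows which (a so-called bigram model) and then generates text from those counts. The training sentence and function names are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text: str) -> dict:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def generate(model: dict, start: str, length: int) -> str:
    """Repeatedly append the most frequent follower of the last word."""
    output = [start]
    for _ in range(length - 1):
        candidates = model.get(output[-1])
        if not candidates:
            break  # the model has never seen a word after this one
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


training_text = ("the model learns patterns and the model learns structures "
                 "and the model learns patterns")
model = train_bigram_model(training_text)
print(generate(model, start="the", length=4))  # the model learns patterns
```

The sketch can only reproduce what occurred in its training text, which also hints at why the knowledge of much larger models is tied to their training data.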

This is where Retrieval Augmented Generation (RAG) comes into play: this approach combines a language model with an external knowledge source, for example a database, website or document archive. Before the model generates an answer, it searches specifically for suitable information and actively incorporates this into the answer.

The result: more up-to-date, fact-based and precise answers – particularly valuable in research, teaching and complex application areas. [1]
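The two steps described above, retrieving suitable information and then incorporating it into the request, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the knowledge source is a small hard-coded list, retrieval is done by counting shared words (real systems typically use more sophisticated search methods), and the final call to a language model is left out.

```python
import re

# Hypothetical mini knowledge source standing in for a database or archive.
DOCUMENTS = [
    "The token limit describes how many tokens a model can process at once.",
    "A system prompt is a hidden instruction that steers the model's behaviour.",
    "Open source models can be adapted and improved by developers.",
]


def words(text: str) -> set:
    """Lower-cased set of words in a text."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, documents: list) -> str:
    """Return the document that shares the most words with the question."""
    question_words = words(question)
    return max(documents, key=lambda doc: len(question_words & words(doc)))


def build_augmented_prompt(question: str, documents: list) -> str:
    """Place the retrieved text in front of the question."""
    context = retrieve(question, documents)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


question = "What is the token limit?"
print(build_augmented_prompt(question, DOCUMENTS))
```

The language model would then receive this augmented prompt instead of the bare question, so its answer can draw on the retrieved text rather than on its training data alone.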

How Our Support Chatbot Ritchy Also Works

Ritchy not only uses powerful language models from Microsoft Azure OpenAI, but also relies on RAG technology to access the content of our IT Center Help documentation portal in real time. The answers are therefore not based on the internet, but on verified, context-related expertise – reliable, up-to-date and available around the clock.

AI Can Be This Simple

The world of artificial intelligence is fascinating and sometimes quite technical. But with the right basic understanding, terms such as LLM, prompt or token limit are easy to decipher. And it is precisely this understanding that helps to better assess the potential of AI and utilise it responsibly.

Responsible for the content of this article are Hania Eid and Melisa Berisha.

Hello IT Center Blog Team,

Thank you very much for this great article. It really is a very successful introduction, written in user-friendly language, and it helps to sort out the topic for oneself a little. Super helpful! More of this, please 🙂

Hello Uschi,

Thank you very much for your positive feedback! We are very pleased to hear that you liked the article and find it helpful. We will do our best to keep creating interesting and user-friendly content. If you have any specific topic suggestions, feel free to let us know!

Best regards

The IT Center Blog Team

Thanks for the explanation.

Thanks for the exciting article. Is there some kind of glossary somewhere where the terms can be looked up? It really is a lot of input.

In the mobile view, the AI's answer is not readable. The image cannot be enlarged. A real pity.

The huge paragraph after the image is not nice either.

Hello SommerSonneSonnenschein,

Thank you very much for your feedback. We are glad that you find the content exciting! Regarding your question about a glossary: unfortunately, we do not have a dedicated glossary at the moment. However, we are happy to take up your suggestion and will look into how we can better support our readers in looking up terms in the future.

Regarding the mobile view, we are sorry that there are difficulties with readability. We are constantly working on optimising our blog and will take your feedback into consideration.

If you have any further suggestions or questions, please do not hesitate to contact us.

Best regards

The IT Center Blog Team

Hello SommerSonneSonnenschein,

Here is an update to our first comment: we have checked the image zoom again and found that it behaves differently from device to device. So feel free to try it on another device. In the meantime, we will find out what might be causing this.

Regarding the request for a glossary, we are proud to announce that one has now been published on IT Center Help. It can be found at the following link: https://help.itc.rwth-aachen.de/service/1808737e10424937b76e564ed15d8028/article/fe975ab86a154e5ab2fb845b30064d64/

Best regards

The IT Center Blog Team